Artificial intelligence (AI) systems have become increasingly prevalent in our society, assisting us with a wide range of tasks. One important aspect of AI is the ability to reason, which allows these systems to draw conclusions and solve problems. Reasoning is commonly divided into two main categories: deductive reasoning, which applies general rules to specific cases, and inductive reasoning, which infers general rules from specific examples. While much research has examined how humans use these forms of reasoning, far less is known about how AI systems employ them. A recent study by a research team at Amazon and the University of California, Los Angeles examined the reasoning abilities of large language models (LLMs), shedding light on their deductive and inductive reasoning capabilities.

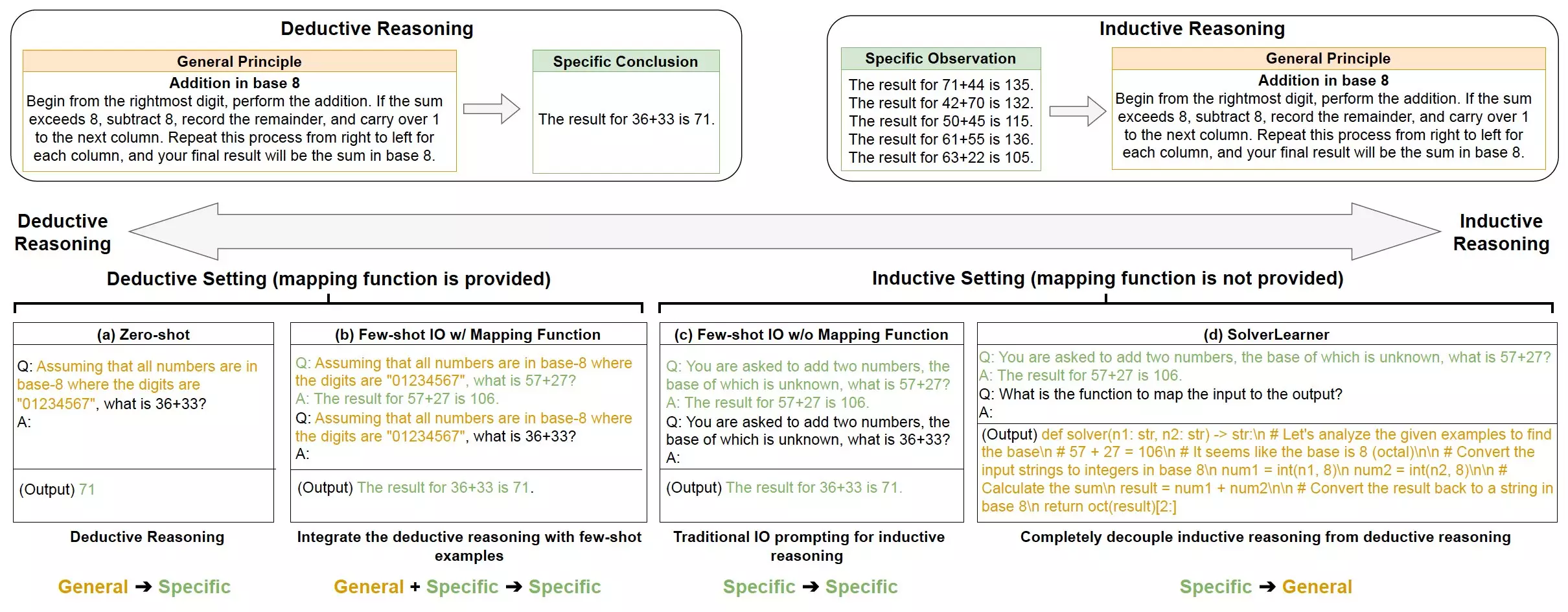

The study focused on the reasoning abilities of LLMs, AI systems trained on large amounts of text to process and generate human language. The researchers found that these models exhibit strong inductive reasoning capabilities but often struggle with deductive reasoning tasks. To better understand these gaps, the researchers introduced a framework called SolverLearner. It separates learning a rule from applying it: the LLM infers the function that maps example inputs to their outputs, and that function is then applied to new cases by an external code interpreter rather than by the model itself. Because the model no longer has to carry out the deductive step, this allows a closer examination of its inductive reasoning abilities.
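To make that separation concrete, here is a minimal Python sketch in the spirit of SolverLearner; it is not the authors' code. It assumes a hypothetical counterfactual task (addition in base 9) and hard-codes the function an LLM might propose in place of a real model call. The names `EXAMPLES`, `PROPOSED_SOURCE`, `compile_solver`, `fits_examples` and `apply_rule` are all illustrative. The model's only job is to produce the rule; executing it is left to the Python interpreter.

```python
"""Sketch of a SolverLearner-style split between rule induction and rule application.

Assumption: in a real system, PROPOSED_SOURCE would be generated by an LLM prompted
with EXAMPLES. Here it is hard-coded so the sketch runs without any model.
"""
from typing import Callable, List, Tuple

# Hypothetical in-context examples for a counterfactual task: addition in base 9.
EXAMPLES: List[Tuple[str, str, str]] = [("15", "27", "43"), ("8", "1", "10"), ("44", "44", "88")]

# Hypothetical LLM output: the induced rule, written as Python source.
PROPOSED_SOURCE = '''
def solver(a: str, b: str) -> str:
    total = int(a, 9) + int(b, 9)
    digits = []
    while total:
        total, r = divmod(total, 9)
        digits.append(str(r))
    return "".join(reversed(digits)) or "0"
'''


def compile_solver(source: str) -> Callable[[str, str], str]:
    """Turn the proposed source into a callable. No sandboxing: illustration only."""
    namespace: dict = {}
    exec(source, namespace)
    return namespace["solver"]


def fits_examples(solver: Callable[[str, str], str], examples: List[Tuple[str, str, str]]) -> bool:
    """Check that the induced rule reproduces every in-context example."""
    return all(solver(a, b) == c for a, b, c in examples)


def apply_rule(solver: Callable[[str, str], str], queries: List[Tuple[str, str]]) -> List[str]:
    """Deductive step, delegated to the interpreter instead of the language model."""
    return [solver(a, b) for a, b in queries]


solver = compile_solver(PROPOSED_SOURCE)
assert fits_examples(solver, EXAMPLES)
print(apply_rule(solver, [("76", "5"), ("100", "8")]))
```

Running it prints `['82', '108']`. Because `exec` runs arbitrary code, a real system would execute model-generated functions in a sandbox.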

The study revealed that LLMs have a stronger aptitude for inductive reasoning, one that held up even in “counterfactual” scenarios that deviate from familiar conventions. In contrast, their deductive reasoning was found to be weaker, especially in hypothetical or non-standard situations. This discrepancy suggests that LLMs may be less reliable when asked to follow explicitly stated rules in unfamiliar settings, which has implications for how AI systems are used in practice.
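A familiar example of a counterfactual task is arithmetic in a base other than 10. The hypothetical Python sketch below contrasts the two directions of reasoning on base-9 addition: `deduce_sum` reasons deductively (the base is stated and simply applied), while `induce_base` reasons inductively by finding every base consistent with observed examples. The brute-force search is only a stand-in to make induction concrete; it is not how LLMs reason internally, nor how the study evaluated them.

```python
"""Inductive vs. deductive reasoning on a counterfactual task (non-decimal addition).

Hypothetical illustration; not code from the study.
"""
from typing import Iterable, List, Tuple


def to_base(n: int, base: int) -> str:
    """Render a non-negative integer using digits 0-9, so valid for bases up to 10."""
    digits = []
    while n:
        n, r = divmod(n, base)
        digits.append(str(r))
    return "".join(reversed(digits)) or "0"


def deduce_sum(a: str, b: str, base: int) -> str:
    """Deductive direction: the rule (the base) is given; apply it to a new case."""
    return to_base(int(a, base) + int(b, base), base)


def induce_base(examples: List[Tuple[str, str, str]], candidates: Iterable[int] = range(2, 11)) -> List[int]:
    """Inductive direction: keep every candidate base that explains all examples."""
    consistent = []
    for base in candidates:
        try:
            if all(deduce_sum(a, b, base) == c for a, b, c in examples):
                consistent.append(base)
        except ValueError:  # an input digit is not valid in this base
            continue
    return consistent


examples = [("15", "27", "43"), ("8", "1", "10")]
print(induce_base(examples))          # [9]
print(deduce_sum("76", "5", base=9))  # 82
```

Here the single example `15 + 27 = 43` already pins the base down to 9, since no other base from 2 to 10 makes it true.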

Understanding the reasoning processes of LLMs can help inform the design and development of AI systems. For instance, when building chatbots or other agent systems, leveraging the strong inductive capabilities of LLMs may lead to better performance. By recognizing the strengths and weaknesses of these systems, developers can assign them tasks that align with their reasoning abilities.

The findings of this study could inspire further research into the reasoning capabilities of LLMs. Future studies may examine how these systems compress information and how this relates to their strong inductive reasoning. By probing the reasoning processes of AI systems more deeply, researchers and developers can continue to enhance the capabilities of LLMs and advance the field of artificial intelligence.

The study conducted by the research team at Amazon and the University of California, Los Angeles provides valuable insights into the reasoning abilities of artificial intelligence systems. By understanding the strengths and weaknesses of LLMs in deductive and inductive reasoning, we can better use these systems in various applications and pave the way for further advances in the field of AI.