Quantum computing and quantum simulation sit at a frontier of modern physics and technology because they could outperform classical computers on certain complex problems. Researchers from Freie Universität Berlin and the University of Maryland, with partners including Google AI and NIST, have recently worked on improving the precision of quantum simulations on a superconducting quantum simulator. Their work highlights the importance of accurately estimating Hamiltonian parameters, which are key to understanding the dynamics of quantum systems.

The collaboration set out to develop robust methods for measuring the Hamiltonian parameters that a superconducting quantum simulator needs in order to function effectively. The effort shows how theoretical frameworks and practical experimentation can work together to move quantum technologies forward.

The work on Hamiltonian learning began by chance, when Jens Eisert received an urgent call from colleagues who were trying to calibrate their Sycamore quantum processor. The task looked straightforward at first, but it soon proved more involved: identifying an unknown Hamiltonian from the data produced by the quantum system was far more laborious than anticipated.

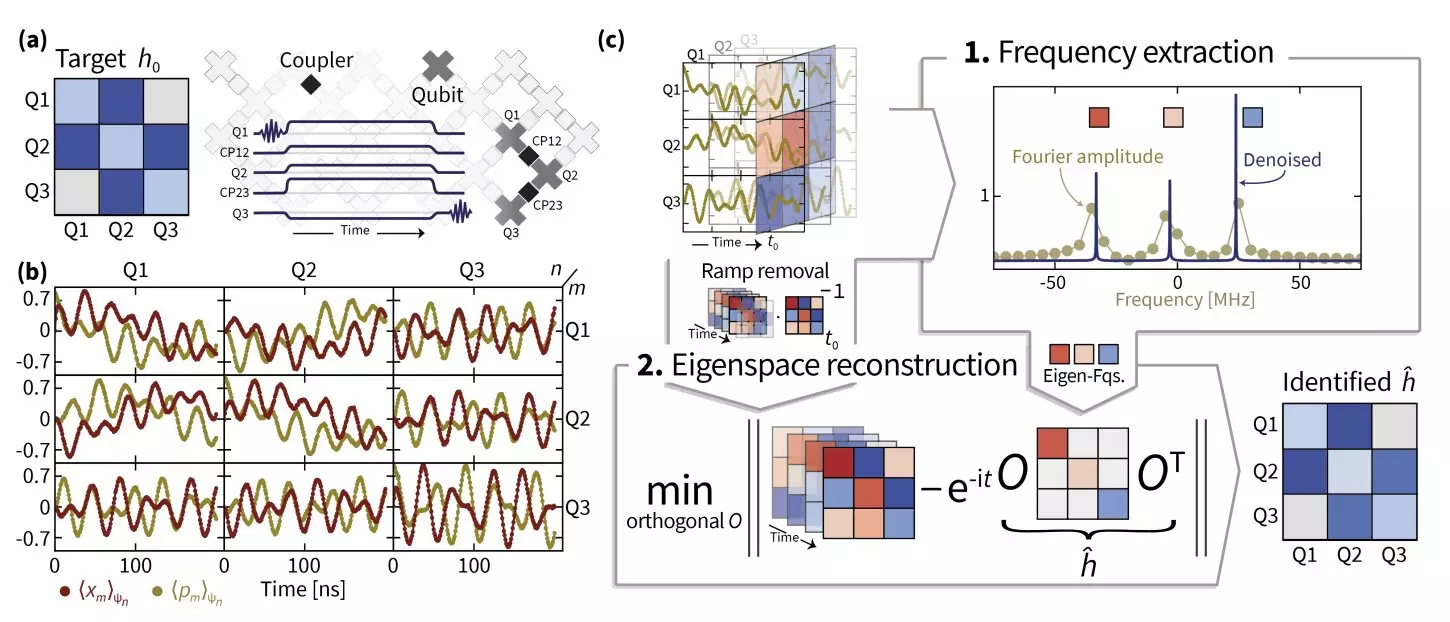

Eisert brought in Ph.D. students Ingo Roth and Dominik Hangleiter, and together they devised strategies based on superresolution. In this setting, superresolution refers to techniques that sharpen eigenvalue estimates, which is crucial for recovering the Hamiltonian's frequencies accurately. The idea was simple in theory, but making the Hamiltonian learning protocols robust in practice took years of collaborative effort.

Central to their eventual success was the combined use of several mathematical techniques. Manifold optimization played a vital role: the quantities being fitted are subject to constraints, such as the requirement that an eigenbasis be orthonormal, so the search takes place on a curved manifold rather than in flat Euclidean space. Optimizing directly on that manifold allowed the researchers to identify the eigenspaces accurately and to extract better information from the data.
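To give a flavor of what optimizing on a manifold means, here is a minimal sketch in Python with NumPy. It is not the team's protocol: it is a toy problem in which a known symmetric matrix `H` stands in for a Hamiltonian, and gradient descent on the set of matrices with orthonormal columns (the Stiefel manifold) finds the eigenspace of the `k` smallest eigenvalues. The function name `lowest_eigenspace`, the step-size rule, and the iteration count are illustrative assumptions.

```python
import numpy as np

def lowest_eigenspace(H, k, iters=500, seed=0):
    """Toy Riemannian gradient descent on the Stiefel manifold.

    Minimizes trace(X^T H X) over n-by-k matrices X with orthonormal columns.
    For a symmetric H, the minimizer spans the eigenspace belonging to the
    k smallest eigenvalues.
    """
    n = H.shape[0]
    rng = np.random.default_rng(seed)
    # Random starting point with orthonormal columns.
    X, _ = np.linalg.qr(rng.standard_normal((n, k)))
    # Conservative step size scaled by the spectral norm (an assumed heuristic).
    step = 0.2 / np.linalg.norm(H, 2)
    for _ in range(iters):
        G = 2.0 * H @ X                      # Euclidean gradient of the cost
        XtG = X.T @ G
        rgrad = G - X @ (XtG + XtG.T) / 2.0  # project onto the tangent space at X
        # QR retraction: step in the tangent direction, then re-orthonormalize.
        # Column signs may flip, which does not change the spanned subspace.
        X, _ = np.linalg.qr(X - step * rgrad)
    return X

# Illustrative use: compare against a direct eigendecomposition.
rng = np.random.default_rng(42)
A = rng.standard_normal((6, 6))
H_demo = (A + A.T) / 2.0
X_demo = lowest_eigenspace(H_demo, k=2)
print("cost from descent:      ", np.trace(X_demo.T @ H_demo @ X_demo))
print("sum of two lowest eigs: ", np.linalg.eigvalsh(H_demo)[:2].sum())
```

The point of the projection and retraction steps is that every iterate stays exactly on the constraint set, instead of drifting off it and being corrected afterwards with a penalty term.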

The difficulties the researchers encountered underscored how subtle real Hamiltonian dynamics are. Idealized assumptions of instantaneous, perfectly unitary transitions fell short, because real quantum processes are imperfect and can evolve in unexpected ways. Eisert’s team therefore turned to signal processing methods, including the newly developed TensorESPRIT algorithm, which let them analyze the data effectively even as system sizes increased.
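The signal processing idea can be illustrated with the classic one-dimensional ESPRIT method, shown here as a minimal NumPy sketch. This is the textbook algorithm, not the team's tensor-based variant. It assumes a noiseless, uniformly sampled signal of the form y[t] = Σ a_k exp(-i λ_k t), which is how eigenfrequencies λ_k appear in time-evolution data; the function name `esprit_frequencies` and all parameter values are illustrative.

```python
import numpy as np

def esprit_frequencies(signal, num_freqs, dt=1.0):
    """Estimate the frequencies lam_k in y[t] = sum_k a_k * exp(-1j * lam_k * t * dt).

    Textbook ESPRIT; assumes |lam_k * dt| < pi so the frequencies are not aliased.
    """
    signal = np.asarray(signal, dtype=complex)
    n = len(signal)
    rows = n // 2
    cols = n - rows + 1
    # Hankel matrix with entries hankel[i, j] = signal[i + j].
    hankel = np.array([signal[i:i + cols] for i in range(rows)])
    # The leading left singular vectors span the signal subspace.
    u, _, _ = np.linalg.svd(hankel, full_matrices=False)
    us = u[:, :num_freqs]
    # Shift invariance: us[1:] is approximately us[:-1] @ psi (least squares).
    psi = np.linalg.lstsq(us[:-1], us[1:], rcond=None)[0]
    # The eigenvalues of psi are the poles exp(-1j * lam_k * dt).
    poles = np.linalg.eigvals(psi)
    return np.sort(-np.angle(poles) / dt)

# Two frequencies spaced more closely than the Fourier limit 2*pi/T of the record.
dt = 0.5
t = np.arange(40) * dt
y = 0.7 * np.exp(-1j * 1.00 * t) + 0.3 * np.exp(-1j * 1.05 * t)
print(esprit_frequencies(y, num_freqs=2, dt=dt))
```

The record here spans a total time of 20, so a plain Fourier transform could not separate frequencies 0.05 apart; subspace methods such as ESPRIT exploit the known structure of the signal instead, which is the sense in which they achieve superresolution.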

Through rigorous testing, the researchers identified Hamiltonian parameters in systems of up to 14 superconducting qubits. The results suggest that the methods can be carried over to larger quantum processors, and they establish a framework that could inform future work on characterizing Hamiltonians across quantum systems.

Moreover, the implications of their work extend beyond the theoretical realm. By establishing robust techniques for Hamiltonian learning, the study contributes directly to the ongoing development of quantum technologies, paving the way for precision modeling in future quantum simulations. These simulations are expected to offer significant insights into complex quantum systems and materials, recreating intricate interactions in controlled laboratory environments.

Looking ahead, Eisert and his collaborators are eager to apply their methods to a broader range of quantum systems, including interacting particles and systems of cold atoms. These explorations matter because they address longstanding questions about the Hamiltonians that govern quantum systems, questions that are often overlooked because the Hamiltonian is simply assumed to be known.

As they pursue these questions, the team also aims to refine their methods for practical, large-scale applications. In essence, they seek to bridge the gap between theoretical inquiry and experimental realization, and so improve predictive capabilities in quantum mechanics.

Work at this intersection of theory and practice advances the understanding of Hamiltonians and supports the development of technologies that could reshape quantum simulation and computation. By tackling the complexities of Hamiltonian learning and providing new tools for understanding quantum dynamics, the researchers are laying the groundwork for future exploration of quantum systems. Progress in quantum simulation depends on research of this kind, which continues to challenge existing paradigms.