Large language models (LLMs) have opened the door to new levels of problem-solving and information retrieval. Yet despite their capabilities, these models often struggle with complex questions or specialized knowledge domains. In response, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed Co-LLM, an algorithm that lets a general-purpose language model collaborate with a specialized counterpart. This cooperation improves the accuracy of responses while keeping generation efficient, because the specialized model is consulted only where it is needed, much as a person turns to a colleague for the parts of a problem outside their own expertise.

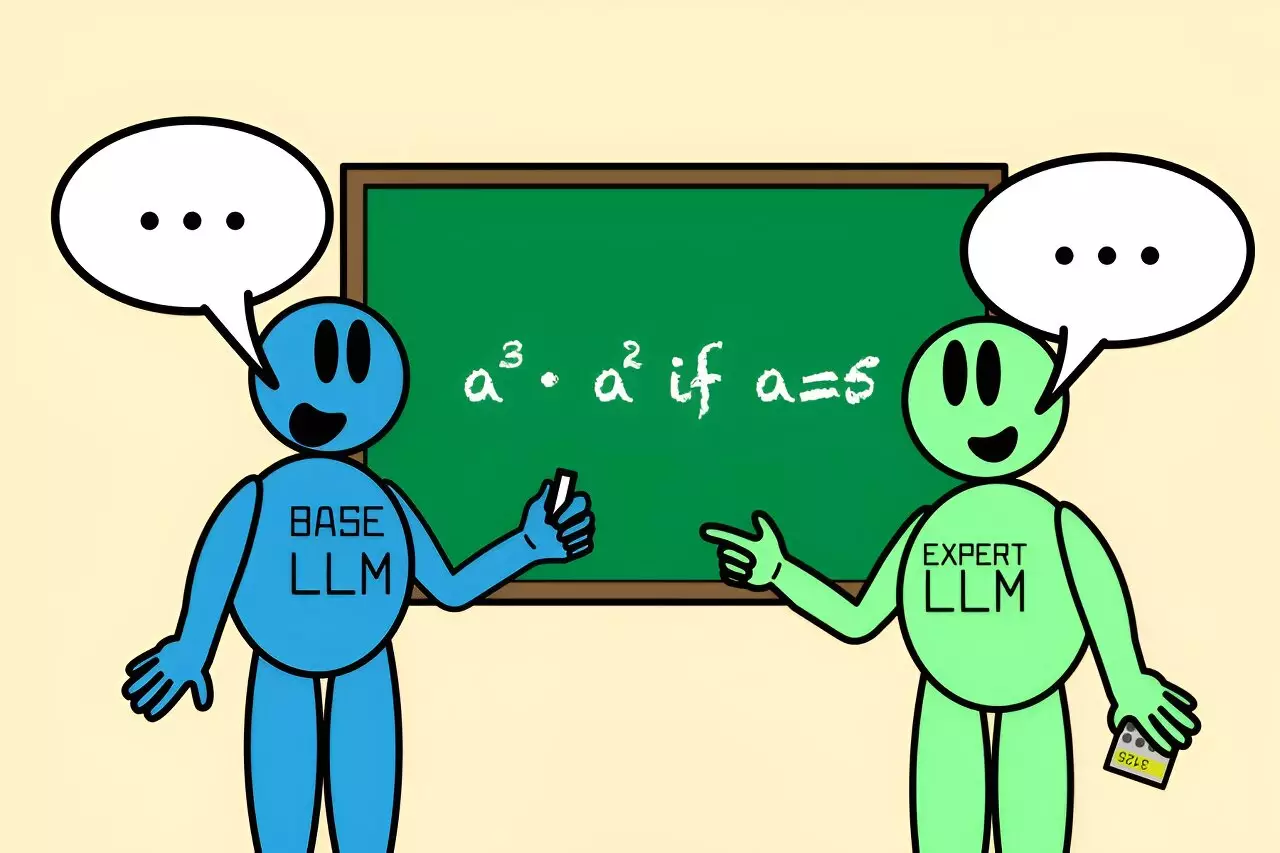

Traditionally, getting LLMs to work together has relied either on extensive training with large datasets or on complex formulas that spell out exactly when each model should contribute. The MIT team takes a different approach with Co-LLM. The algorithm pairs a general-purpose LLM with a domain-specific model so that the two work in tandem. As the general-purpose model drafts its answer to a question, Co-LLM reviews each word (or token) of the response and decides where it is worth consulting the specialized model for a more accurate answer.
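The token-level collaboration described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers’ implementation: the two “models” are hypothetical scripted functions, and `co_decode`, the `switch` function, and the 0.5 threshold are names and values chosen here for clarity. At each step the switch decides which model writes the next token, so the expert is queried only for the tokens it is handed.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces: a "model" maps the tokens so far to its next token,
# and the switch maps the tokens so far to P(expert should write the next token).
NextToken = Callable[[List[str]], str]
Switch = Callable[[List[str]], float]


def co_decode(prompt: List[str], base: NextToken, expert: NextToken, switch: Switch,
              threshold: float = 0.5, max_tokens: int = 20) -> Tuple[List[str], List[str]]:
    """Generate token by token, deferring to the expert only when the switch fires."""
    context, output, sources = list(prompt), [], []
    for _ in range(max_tokens):
        if switch(context) > threshold:
            token, src = expert(context), "expert"  # expert is called only here
        else:
            token, src = base(context), "base"
        if token == "<eos>":
            break
        context.append(token)
        output.append(token)
        sources.append(src)
    return output, sources


# Toy scripted models (hypothetical): each returns the word at the current answer position.
prompt = ["Q:", "what", "is", "x?"]
base_words = ["The", "answer", "is", "roughly", "12", "<eos>"]
expert_words = ["The", "answer", "is", "exactly", "12.5", "<eos>"]


def step(ctx: List[str]) -> int:
    return len(ctx) - len(prompt)


def base(ctx: List[str]) -> str:
    return base_words[step(ctx)]


def expert(ctx: List[str]) -> str:
    return expert_words[step(ctx)]


def switch(ctx: List[str]) -> float:
    # Pretend the switch is confident the expert should handle the precise value.
    return 0.9 if step(ctx) in (3, 4) else 0.1


tokens, sources = co_decode(prompt, base, expert, switch)
print(" ".join(tokens))  # The answer is exactly 12.5
print(sources)
```

Here the general model writes the framing words and the expert supplies only the precise value, which is the division of labor the paragraph describes.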

This dual-model approach departs notably from earlier methods. Unlike prior techniques that required both models to run simultaneously, Co-LLM uses a learned “switch variable.” The switch acts like a project manager, pinpointing which parts of the general model’s output need expert input. For instance, if the general LLM is asked to name extinct bear species, Co-LLM can pull precise details, such as extinction dates, from the specialized model, producing a richer and more accurate response.
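One simple way to picture the switch variable is as a per-token gate: a probability, computed from the general model’s internal state, that the next token should come from the expert. The sketch below assumes a logistic gate over a hidden-state vector; the function names, the weights and bias, and the two-dimensional “hidden states” are all hypothetical stand-ins, not values or code from Co-LLM.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def switch_probability(hidden: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Hypothetical gate: P(next token comes from the expert), modeled as a
    logistic function of the general model's hidden state at this position."""
    logit = sum(h * w for h, w in zip(hidden, weights)) + bias
    return sigmoid(logit)


def positions_to_defer(hiddens: Sequence[Sequence[float]], weights: Sequence[float],
                       bias: float, threshold: float = 0.5) -> List[int]:
    """Indices of the token positions the gate would hand to the expert."""
    return [i for i, h in enumerate(hiddens)
            if switch_probability(h, weights, bias) > threshold]


# Toy 2-dimensional "hidden states" for four token positions (made-up numbers).
hiddens = [[0.1, 0.5], [1.0, 0.2], [0.3, 0.3], [1.5, 0.0]]
weights, bias = [2.0, -1.0], -0.5

for i, h in enumerate(hiddens):
    print(i, round(switch_probability(h, weights, bias), 2))
print("defer at:", positions_to_defer(hiddens, weights, bias))  # defer at: [1, 3]
```

Thresholding the probability at 0.5 is only one possible decision rule; a lower threshold would call the expert more often, trading speed for caution.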

A key advantage of Co-LLM is efficiency. Relying solely on a specialized model, or running both models at every step as earlier methods did, tends to lengthen response time. Because the switch variable calls on the expert model only when necessary, most of the response is generated by the broader general-purpose model, saving both time and compute.
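The efficiency argument comes down to simple arithmetic. The sketch below uses a rough per-token cost model that is purely an assumption: costs are in arbitrary units, the expert is assumed to cost five times as much per token as the general model, and overheads such as the expert re-reading the context are ignored. `decoding_cost` is a hypothetical helper, not part of any Co-LLM release.

```python
from typing import Dict


def decoding_cost(n_tokens: int, n_deferred: int,
                  base_cost: float, expert_cost: float) -> Dict[str, float]:
    """Rough compute estimate (arbitrary units) for three decoding strategies."""
    if not 0 <= n_deferred <= n_tokens:
        raise ValueError("n_deferred must be between 0 and n_tokens")
    return {
        # The specialized model writes every token on its own.
        "expert_only": n_tokens * expert_cost,
        # Both models run at every step.
        "both_every_token": n_tokens * (base_cost + expert_cost),
        # The general model runs every step (in this sketch it also computes the
        # switch); the expert runs only on the tokens the switch hands to it.
        "switch_gated": n_tokens * base_cost + n_deferred * expert_cost,
    }


costs = decoding_cost(n_tokens=200, n_deferred=20, base_cost=1.0, expert_cost=5.0)
print(costs)  # {'expert_only': 1000.0, 'both_every_token': 1200.0, 'switch_gated': 300.0}
```

With these illustrative numbers, deferring 20 of 200 tokens costs 300 units, compared with 1,000 for the expert alone and 1,200 for running both models at every step. The savings shrink as the fraction of deferred tokens grows.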

Moreover, the way Co-LLM learns parallels human behavior: just as people seek out an expert on specialized topics, Co-LLM instills a similar instinct in language models. Trained on domain-specific data, the general LLM learns to recognize the points where its own response is likely to be imprecise and to hand those points to the specialized model.
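How can the general model learn when to defer without anyone labeling which tokens need an expert? One option is to treat the switch as a latent variable: each observed token is assumed to come from a mixture of the two models, weighted by the switch probability, and the switch is trained to maximize the likelihood of domain data under that mixture. The toy sketch below makes that assumption. The one-number “features,” the token probabilities, and the helper names are hypothetical, and the code illustrates the idea only; it is not Co-LLM’s training code.

```python
import math
from typing import List, Tuple

# Each training token: (feature h, p_base(observed token), p_expert(observed token)).
# Hypothetical toy data: h = 1.0 marks "domain-heavy" tokens where the expert fits better.
Data = List[Tuple[float, float, float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def nll(data: Data, w: float, b: float) -> float:
    """Mean negative log marginal likelihood: the switch is never observed, so we
    sum over both choices, p = (1 - g) * p_base + g * p_expert."""
    total = 0.0
    for h, p_base, p_expert in data:
        g = sigmoid(w * h + b)
        total -= math.log((1 - g) * p_base + g * p_expert)
    return total / len(data)


def train_switch(data: Data, lr: float = 1.0, steps: int = 500) -> Tuple[float, float]:
    """Gradient ascent on the mean log-likelihood with respect to the gate's w and b."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for h, p_base, p_expert in data:
            g = sigmoid(w * h + b)
            mix = (1 - g) * p_base + g * p_expert
            # d log(mix) / d logit = g * (1 - g) * (p_expert - p_base) / mix
            d = g * (1 - g) * (p_expert - p_base) / mix
            grad_w += d * h
            grad_b += d
        w += lr * grad_w / len(data)
        b += lr * grad_b / len(data)
    return w, b


data = [(0.0, 0.8, 0.3), (0.0, 0.7, 0.2), (1.0, 0.2, 0.9), (1.0, 0.1, 0.6)]
w, b = train_switch(data)
print(f"P(defer | general token) = {sigmoid(b):.2f}")
print(f"P(defer | domain token)  = {sigmoid(w + b):.2f}")
```

Because the sign of each gradient term follows `p_expert - p_base`, the gate learns to open on tokens the expert explains better and to stay closed elsewhere, without any per-token labels.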

Co-LLM is especially useful in fields that demand accurate, detailed information, such as healthcare and education. For example, when asked about the composition of a pharmaceutical drug, the general model can consult a specialized biomedical LLM to check the accuracy of its answer. Similarly, on math problems, Co-LLM switches to a model proficient in numerical reasoning when it encounters complex equations, reducing calculation errors.

In tests on datasets such as the BioASQ medical benchmark, Co-LLM consistently achieved higher accuracy than its component models working alone. By combining the strengths of a general model and a specialist, the framework often produced more reliable results than independently fine-tuned or specialized models, suggesting that LLM systems need not trade expertise for efficiency.

Looking ahead, the researchers see several ways to extend Co-LLM. One is a self-correction mechanism that would let the algorithm backtrack and repair the response when the specialized model produces an incorrect output. Such a mechanism could further improve reliability, mirroring the flexibility of human collaboration in problem-solving.

Another goal is to keep the specialized models up to date as new knowledge becomes available. This would help LLMs stay relevant in fast-moving fields such as medicine, where outdated information can have serious consequences. Co-LLM’s potential also extends beyond information retrieval: letters, reports, and other documents could be updated in real time, integrating the latest developments while preserving their underlying logical accuracy.

Co-LLM represents a significant shift in how language models collaborate. By letting a model “phone a friend,” the algorithm moves beyond the traditional picture of models operating independently and introduces a framework that mirrors how people seek out expertise. Because knowledge is spread across many specialized domains, much as human learning is, the future of AI may well depend on such collaborative approaches, which could change not only how accurate models are but how they acquire and use knowledge. As research on Co-LLM and its applications continues, it offers an example of how artificial intelligence might genuinely augment human understanding.