Recent developments in speech emotion recognition (SER) have underscored the vast potential of deep learning technologies to transform emotional analysis across diverse applications. From enhancing human-computer interaction to improving mental health diagnostics, the implications are profound. Nonetheless, with innovation comes risk; these increasingly sophisticated models are not immune to vulnerabilities, particularly against adversarial attacks. This article delves into the findings from a comprehensive study conducted by researchers at the University of Milan, examining how various forms of adversarial intrusions impact SER across different languages and gender-specific characteristics.

The study in question, published in *Intelligent Computing* on May 27, provides a meticulous analysis of how SER models, particularly convolutional neural networks combined with long short-term memory (CNN-LSTM) architectures, react under assault from adversarial examples. These examples are ingeniously crafted inputs designed to provoke incorrect outputs from the models. The research presents evidence that these models are remarkably prone to white-box and black-box attacks, both of which significantly degrade their performance. Such vulnerabilities raise alarming implications; a compromised SER system could lead to erroneous emotional assessments, thereby triggering potentially serious consequences in real-world applications ranging from security systems to mental health evaluations.

To investigate the attacks, the researchers evaluated three distinct datasets: EmoDB for German, EMOVO for Italian, and RAVDESS for English. They implemented a diverse array of both white-box and black-box attacks, utilizing advanced methods like the Fast Gradient Sign Method and Carlini and Wagner attack for the former, while employing One-Pixel Attack and Boundary Attack for the latter. Interestingly, the results revealed that black-box attacks, particularly the Boundary Attack, were remarkably effective and, in some cases, even outperformed white-box attacks. This raises critical questions about the efficacy of traditional defenses and indicates that even rudimentary observations of an SER model’s outputs could potentially empower attackers without an in-depth understanding of the underlying architecture.

The research also adopted a unique gender-focused lens to assess the impact of adversarial attacks on speech emotional recognition, noting subtle differences between male and female speech samples. The analysis indicated marginally lowered accuracy within male samples concerning white-box attacks. However, discrepancies in performance across genders were relatively minor, suggesting a consistent vulnerability landscape. Additionally, while English was the language most susceptible to adversarial manipulations, Italian demonstrated remarkable resilience. This nuanced examination offers critical insights into how gender and language nuances can influence the robustness of SER models and emphasizes the need for tailored approaches in developing defenses.

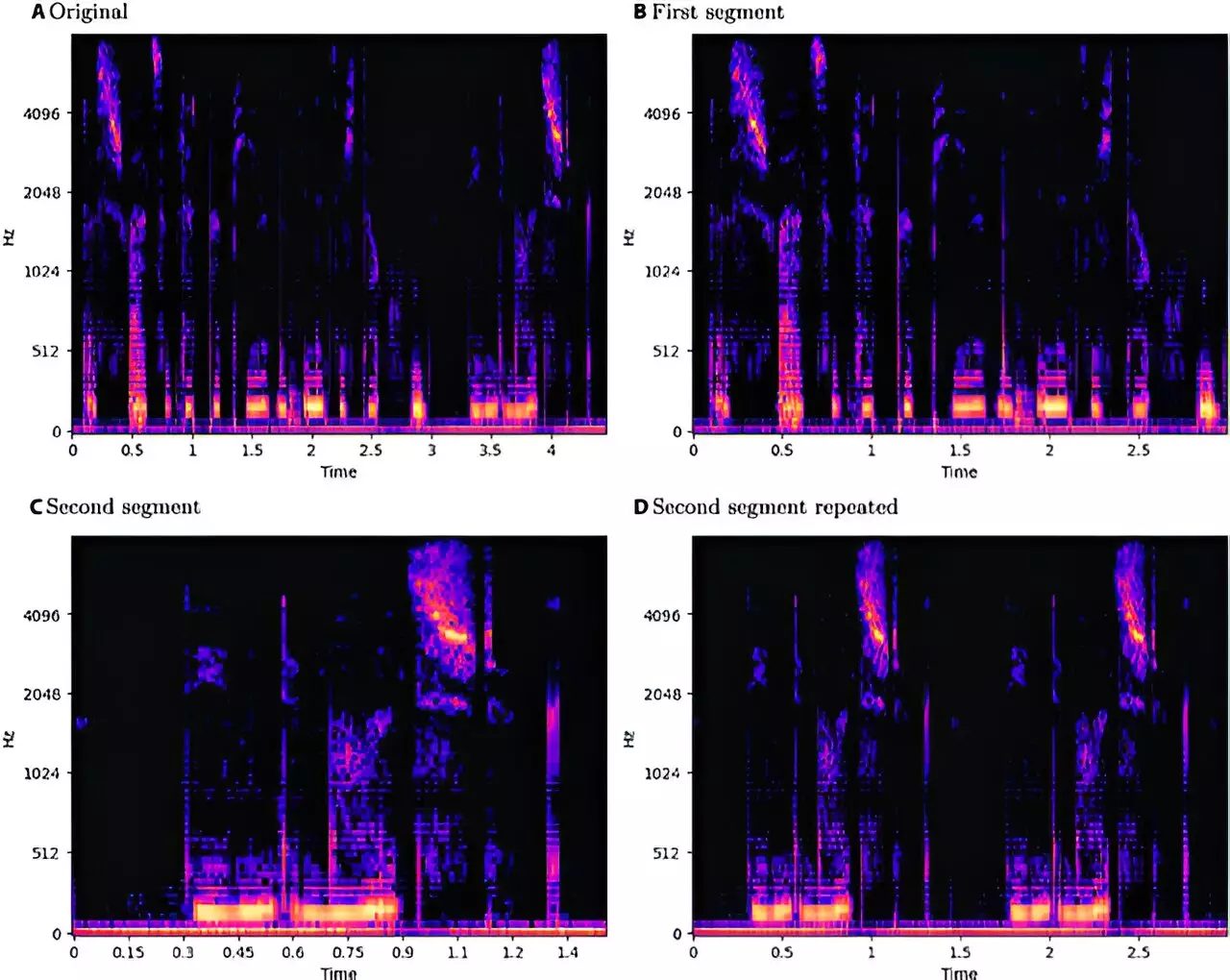

The researchers put forth a structured methodology for audio data processing that harmonizes with the CNN-LSTM framework used throughout their experiments, ensuring a consistent and standardized evaluation process. Techniques like pitch shifting and time stretching were employed in augmenting the datasets, while maintaining a strict sample duration. This commitment to methodological rigor enhances the reliability of their findings, establishing a framework for future research that could equally mitigate the identified vulnerabilities.

An intriguing component of the study revolves around the philosophical debate regarding the distribution of findings in adversarial machine learning. While revealing vulnerabilities could furnish attackers with insights, withholding such information might hinder the development of robust defenses. Transparency allows for a collaborative effort in understanding weaknesses, fostering an environment where practitioners can effectively secure their models against potential exploitation. By disseminating knowledge about vulnerabilities, researchers provide a pathway for continuous improvement and innovation within the technological sphere.

The findings from the University of Milan’s research present a critical viewpoint on the vulnerabilities of speech emotion recognition models. As technology evolves, understanding and addressing these weaknesses must become a priority to secure practical applications. The interplay between research transparency and the development of adversarial defenses could help carve a path toward a more resilient and secure landscape in emotion recognition technologies. In doing so, we can harness their full potential while safeguarding against both intentional exploitation and accidental misinterpretation.