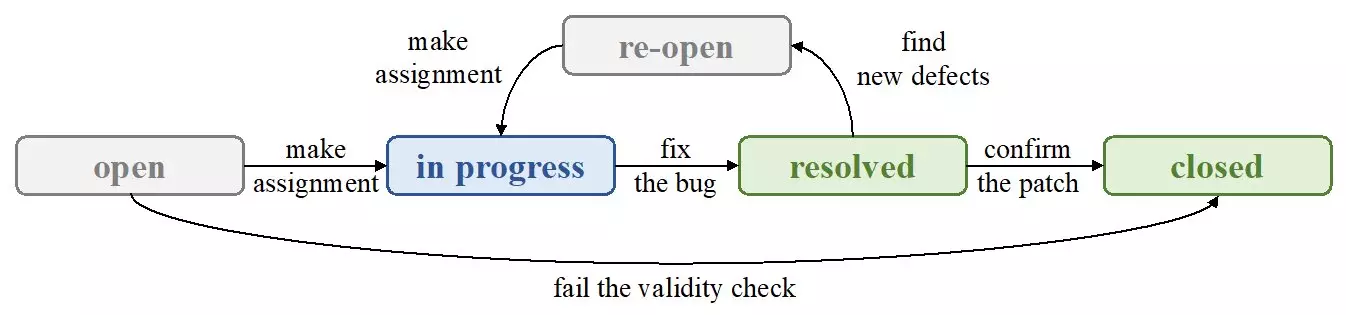

Automatic bug assignment, the task of routing each incoming bug report to a developer who can fix it, has become an important area of software engineering as projects have grown in size and complexity. Textual bug reports often carry the information developers need to diagnose and resolve an issue, but relying on that text alone has real drawbacks. Existing approaches have typically processed report text with classical Natural Language Processing (NLP) techniques. These techniques are foundational, but they struggle to capture the nuances of bug descriptions. Recent research suggests that they can miss important signals, which makes bug assignment less accurate and less efficient.

Insights from Recent Research

A notable study led by Zexuan Li and published in *Frontiers of Computer Science* addresses this problem. The researchers examined whether TextCNN, a convolutional neural network for text classification, could extract more useful information from the textual features of bug reports. Surprisingly, their findings indicate that even with this more advanced technique, textual features do not consistently outperform nominal features. This suggests that the field may need to reconsider which features actually drive performance in bug assignment systems.
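For readers unfamiliar with TextCNN, the model slides convolutional filters of several widths over a sequence of word embeddings and applies a nonlinearity. It then max-pools each filter's responses over the whole sequence, which yields a fixed-length feature vector for a classifier. The sketch below shows only that feature-extraction forward pass in plain Python. The tiny embedding table, the filter weights, and the function names are hypothetical placeholders. A real model learns the weights during training and adds an output layer; for bug assignment, that layer would have one class per candidate developer.

```python
from typing import Dict, List, Tuple

Vector = List[float]
# A filter is (kernel rows, bias); each kernel row has the embedding dimension.
Filter = Tuple[List[Vector], float]


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def conv_max_pool(embedded: List[Vector], kernel: List[Vector], bias: float) -> float:
    """Slide one filter over the token embeddings, apply ReLU to each window,
    and keep the largest activation (max-over-time pooling)."""
    width = len(kernel)
    if len(embedded) < width:
        return 0.0  # sequence shorter than the filter: no valid window
    activations = []
    for start in range(len(embedded) - width + 1):
        window = embedded[start:start + width]
        total = bias
        # Multiply filter and window element-wise and sum (a dot product).
        for token_vec, kernel_row in zip(window, kernel):
            total += sum(t * k for t, k in zip(token_vec, kernel_row))
        activations.append(relu(total))
    return max(activations)


def textcnn_features(tokens: List[str], embeddings: Dict[str, Vector],
                     filters: List[Filter]) -> Vector:
    """Return one pooled feature per filter; unknown tokens embed as zeros."""
    dim = len(next(iter(embeddings.values())))
    embedded = [embeddings.get(tok, [0.0] * dim) for tok in tokens]
    return [conv_max_pool(embedded, kernel, bias) for kernel, bias in filters]


# Hypothetical 2-dimensional embeddings and two hand-set filters (widths 1 and 2).
EMB = {"crash": [1.0, 0.0], "on": [0.0, 0.1], "startup": [0.5, 0.5], "ui": [0.0, 1.0]}
FILTERS = [
    ([[1.0, 0.0]], 0.0),
    ([[1.0, 0.0], [0.0, 1.0]], -0.5),
]
print(textcnn_features("crash on startup".split(), EMB, FILTERS))
```

In this toy input, the width-1 filter responds most strongly to `crash` (1.0) and the width-2 filter to the bigram `crash on` (0.6). Each output dimension therefore records the best match for one pattern anywhere in the report, regardless of where that match occurs.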

The research team found that nominal features, meaning categorical attributes of a bug report that reflect developers' preferences, were crucial to making bug assignment more effective. Using systematic feature selection, they identified which features significantly influenced bug assignment and characterized the advantages of nominal features over textual data. The key insight is that nominal features encode concise, structured information about a developer's expertise or preferences. As a result, they can give a classifier a clearer signal than long, noisy free-text descriptions.
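Classifiers such as Decision Tree and SVM expect numeric input, so nominal features are typically converted to vectors, for example with one-hot encoding. The sketch below is an illustration, not the study's pipeline. The field names (`product`, `component`, `severity`), their values, and the helper names are hypothetical placeholders for whatever nominal attributes a project records. Each report becomes a short, fixed-length vector, in contrast to the variable-length text of a description.

```python
from typing import Dict, List, Sequence

Report = Dict[str, str]


def fit_vocabulary(reports: Sequence[Report], fields: Sequence[str]) -> Dict[str, List[str]]:
    """Collect the sorted set of values observed for each nominal field."""
    return {field: sorted({r[field] for r in reports if field in r}) for field in fields}


def one_hot(report: Report, vocab: Dict[str, List[str]]) -> List[int]:
    """Encode a report as concatenated one-hot blocks, one block per field.
    A value not seen during fitting encodes as an all-zero block."""
    vector: List[int] = []
    for field, values in vocab.items():
        block = [0] * len(values)
        value = report.get(field)
        if value in values:
            block[values.index(value)] = 1
        vector.extend(block)
    return vector


# Hypothetical training reports with three nominal fields.
TRAIN = [
    {"product": "core", "component": "ui", "severity": "major"},
    {"product": "core", "component": "network", "severity": "minor"},
    {"product": "web", "component": "ui", "severity": "major"},
]
VOCAB = fit_vocabulary(TRAIN, ["product", "component", "severity"])
print(one_hot({"product": "web", "component": "ui", "severity": "minor"}, VOCAB))
```

Here the new report encodes as `[0, 1, 0, 1, 0, 1]`, with one active position per field. Mapping unseen values to all zeros is one simple policy. Reserving an explicit "unknown" slot per field would be an equally reasonable choice.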

Methodology and Experimental Findings

In their approach, Li and his team posed three central questions: (1) How well do textual features perform when processed with an advanced NLP technique? (2) What role do influential features play in bug assignment? (3) How can these features improve model performance? To answer them, the team reproduced the TextCNN model and applied a wrapper feature-selection method with a bidirectional search strategy. A wrapper method scores candidate feature subsets by training and evaluating the classifier itself. The team used classifiers such as Decision Tree and Support Vector Machine (SVM) and evaluated them on datasets from multiple projects of varying size and characteristics.
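One common way to make a wrapper search bidirectional is stepwise selection. Each round alternates a forward step (add the most helpful unused feature) with a backward step (drop a selected feature if that helps), and the search stops when neither step improves the score. The sketch below assumes that interpretation, because the article does not spell out the study's exact search procedure. `score` is a caller-supplied function. In practice it would return something like the cross-validated accuracy of a Decision Tree or SVM trained on the chosen features. The feature names and toy scores are made up purely to exercise the search.

```python
from typing import Callable, FrozenSet, Sequence

FeatureSet = FrozenSet[str]
# Caller-supplied wrapper score, e.g. cross-validated accuracy of a classifier
# trained only on the given features (higher is better).
Score = Callable[[FeatureSet], float]


def bidirectional_select(features: Sequence[str], score: Score,
                         max_rounds: int = 100) -> FeatureSet:
    """Stepwise wrapper search: each round tries one forward step (add the best
    unused feature) and one backward step (drop the feature whose removal
    scores best), accepting a step only if it strictly improves the score."""
    selected: FeatureSet = frozenset()
    best = score(selected)
    for _ in range(max_rounds):
        improved = False

        # Forward step: evaluate every subset with one extra feature.
        additions = [selected | {f} for f in features if f not in selected]
        if additions:
            candidate = max(additions, key=score)
            candidate_score = score(candidate)
            if candidate_score > best:
                selected, best, improved = candidate, candidate_score, True

        # Backward step: evaluate every subset with one feature removed.
        removals = [selected - {f} for f in sorted(selected)]
        if removals:
            candidate = max(removals, key=score)
            candidate_score = score(candidate)
            if candidate_score > best:
                selected, best, improved = candidate, candidate_score, True

        if not improved:
            break  # neither direction helps: local optimum reached
    return selected


# Made-up scores standing in for classifier accuracy on each feature subset.
TOY_SCORES = {
    frozenset(): 0.50,
    frozenset({"feat_a"}): 0.70,
    frozenset({"feat_b"}): 0.65,
    frozenset({"feat_c"}): 0.60,
    frozenset({"feat_a", "feat_b"}): 0.75,
    frozenset({"feat_a", "feat_c"}): 0.72,
    frozenset({"feat_b", "feat_c"}): 0.80,
    frozenset({"feat_a", "feat_b", "feat_c"}): 0.78,
}
chosen = bidirectional_select(["feat_a", "feat_b", "feat_c"], lambda s: TOY_SCORES[s])
print(sorted(chosen))
```

With these made-up scores, the search first adds `feat_a` and later adds the other two features. Its backward step then drops `feat_a` again, so it ends with `feat_b` and `feat_c`. This ability to recover from an early greedy choice is the main reason to search in both directions rather than only forward.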

The results were revealing. Although the more advanced NLP technique brought some benefit, relying on nominal features often improved accuracy by 11% to 25%. These results challenge the conventional emphasis on textual analysis in bug assignment. Instead, they highlight the value of systematically selecting the feature types best suited to a given prediction task.

Implications for Future Development

Looking forward, the implications of this research extend beyond accuracy gains. The work raises the question of how nominal features should be integrated into machine learning models. The study suggests building knowledge graphs that link these features to related descriptive terms, which could produce richer embeddings for them. This could open new directions for bug assignment, streamline software engineering workflows, and ultimately improve software quality.
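The article does not describe how such embeddings would be built. The sketch below is one simple, hypothetical reading of the idea. It represents the knowledge graph as edges from each nominal value to descriptive terms, then embeds a nominal value as the mean of its linked terms' vectors. Nominal values that share vocabulary therefore land close together. The graph contents, the term vectors, and the function names are illustrative assumptions, not the study's design.

```python
import math
from typing import Dict, List, Set

Vector = List[float]


def embed_nominal(value: str, graph: Dict[str, Set[str]],
                  term_vectors: Dict[str, Vector]) -> Vector:
    """Embed a nominal value as the mean of the vectors of the descriptive
    terms it links to; terms without a vector are skipped, and a value with
    no known terms gets a zero vector."""
    dim = len(next(iter(term_vectors.values())))
    terms = [t for t in sorted(graph.get(value, set())) if t in term_vectors]
    if not terms:
        return [0.0] * dim
    return [sum(term_vectors[t][i] for t in terms) / len(terms) for i in range(dim)]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; defined as 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


# Hypothetical graph: nominal values linked to descriptive terms.
GRAPH = {
    "component:network": {"socket", "timeout", "proxy"},
    "component:http": {"timeout", "proxy", "header"},
    "component:ui": {"button", "layout"},
}
# Hypothetical 3-dimensional term vectors (in practice, pretrained word embeddings).
TERMS = {
    "socket": [1.0, 0.0, 0.0], "timeout": [0.9, 0.1, 0.0], "proxy": [0.8, 0.0, 0.2],
    "header": [0.7, 0.0, 0.3], "button": [0.0, 1.0, 0.0], "layout": [0.0, 0.9, 0.1],
}
net_vec = embed_nominal("component:network", GRAPH, TERMS)
http_vec = embed_nominal("component:http", GRAPH, TERMS)
ui_vec = embed_nominal("component:ui", GRAPH, TERMS)
print(round(cosine(net_vec, http_vec), 3), round(cosine(net_vec, ui_vec), 3))
```

With this made-up data, the two networking-related components come out nearly parallel and the UI component nearly orthogonal to them. A purely one-hot encoding would treat all three values as equally unrelated.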

As the field of automatic bug assignment progresses, embracing these findings will be vital for enhancing development efficiency and the robustness of software products.